Unlock Face Recognition: Keras & TensorFlow Siamese Power


Introduction to Face Recognition with Siamese Networks

Welcome, guys, to the fascinating world of face recognition, a technology that’s no longer just sci-fi but an integral part of our daily lives, from unlocking our phones to securing high-tech facilities. But have you ever wondered how these systems work, especially when they need to identify someone from just a single glance or a very limited set of data? This is where Siamese Networks shine, offering a powerful and elegant solution to what’s known as the “one-shot learning” problem. In this article, we’re going to dive deep into how you can use Siamese Networks to build robust face recognition systems, all powered by the dynamic duo of Keras and TensorFlow. We’ll explore the underlying principles, walk through the architecture, and cover the practical steps to get your own system up and running. Get ready for a journey that combines cutting-edge deep learning with real-world applications, making complex concepts accessible to everyone interested in computer vision and artificial intelligence.

Table of Contents

- Introduction to Face Recognition with Siamese Networks

- What is Face Recognition Anyway?

- Why Siamese Networks for Facial Identification?

- Keras & TensorFlow: The Ultimate Deep Learning Duo

- Unpacking the Power of Siamese Networks

- How Siamese Networks Actually Work

- The Magic of One-Shot Learning with Siamese Networks

- Understanding the Triplet Loss Function

- Building Your Own Face Recognition System

- Setting Up Your Deep Learning Environment

- Data Preparation: The Foundation of Success

- Designing the Siamese Network Architecture

- Training Your Siamese Model with Keras and TensorFlow

- Evaluating Performance and Fine-Tuning Your System

- Real-World Applications and Ethical Considerations

- The Broad Spectrum of Face Recognition Applications

- Navigating the Ethical Maze of Facial Recognition

- Conclusion: Your Journey into Face Recognition Mastery

What is Face Recognition Anyway?

So, what exactly is face recognition? At its core, face recognition is a technology capable of identifying or verifying a person from a digital image or a video frame. It’s a specialized area within computer vision that goes beyond merely detecting a face; it’s about determining who that face belongs to. Think of it like this: face detection simply tells you there’s a face in the picture, drawing a bounding box around it. Face recognition, however, takes that detected face and either matches it against a database of known faces (identification) or determines whether two different images show the same person (verification). This is inherently hard because the appearance of a human face varies enormously. Lighting conditions, pose, expression, aging, accessories like glasses, and even a newly grown beard can drastically alter how a face looks. Traditional methods often struggled with this variability, but thanks to deep learning and frameworks like Keras and TensorFlow, we can now build systems that learn to extract features that stay largely stable across these changes, making them far more resilient. The goal is to create a distinctive biometric signature for each individual, enabling accurate identification in scenarios ranging from security checkpoints to personalized experiences on our devices. Understanding these nuances is crucial for appreciating why specialized architectures like Siamese Networks are so valuable for high-performance face recognition.

Why Siamese Networks for Facial Identification?

Now, you might be asking, “Why Siamese Networks specifically for face recognition?” Well, here’s the game-changer: traditional classification models, which sort inputs into a fixed set of predefined classes, typically need a large number of examples per class to learn effectively. Imagine needing hundreds or thousands of photos of each person you want your face recognition system to identify. That’s simply not practical for many real-world applications, especially when the number of users keeps growing. This is where Siamese Networks come into play. They are designed for one-shot learning or few-shot learning, meaning they can learn to tell classes apart (in our case, individuals) from only one or a handful of examples per class. Instead of classifying a face into a fixed ‘person A’ or ‘person B’ category, a Siamese Network learns a similarity metric: it tells you how similar two faces are. This is incredibly powerful for face recognition because you can enroll a new person with just a single reference image. Later, when an unknown face appears, the network compares it to the stored reference, and if the similarity is high enough (equivalently, if the distance between the two is small enough), bingo, it’s a match! This approach dramatically reduces data requirements and makes the system far more scalable, because new identities can be added without retraining the model. That is exactly where conventional classifiers struggle for this task: their output layer is tied to a fixed set of classes, so adding a person means changing and retraining the model.

Keras & TensorFlow: The Ultimate Deep Learning Duo

Alright, folks, let’s talk about the tools that make all this deep learning wizardry possible: Keras and TensorFlow. If you’re diving into deep learning, this duo is an excellent toolkit, especially for building systems like face recognition with Siamese Networks. TensorFlow, developed by Google, serves as the robust, high-performance backend. It’s the engine that handles the heavy mathematical operations, automatic differentiation, distributed computing, and GPU acceleration required for training large neural networks. Think of it as the foundation, providing the low-level API and the execution machinery. However, working directly with raw TensorFlow can sometimes feel a bit like writing in assembly language: incredibly powerful, but also verbose and intricate. This is where Keras steps in as your friendly, high-level API. Keras ships with TensorFlow as tf.keras (standalone Keras has supported other backends over the years, and Keras 3 can also run on JAX and PyTorch). It simplifies defining, training, and evaluating deep learning models, so you can build complex architectures, including the Siamese Networks we’re discussing, in just a few lines of code. By abstracting away much of TensorFlow’s underlying complexity, it lets you focus on model design and experimentation instead of low-level implementation details. The combination of Keras’s ease of use and TensorFlow’s raw power means you can rapidly prototype, iterate, and deploy your models, making deep learning accessible to a broad audience of developers and researchers.

Unpacking the Power of Siamese Networks

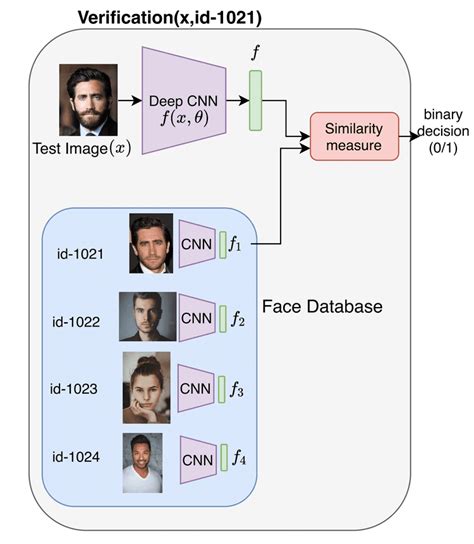

Now that we’ve grasped the “why” behind Siamese Networks for face recognition, let’s peel back the layers and understand the “how.” At their core, Siamese Networks are a neural network architecture designed to learn a similarity function between two inputs. Unlike traditional networks that classify an input into one of many categories, Siamese Networks are all about comparison. Picture identical twins who think exactly alike, each handed a different photo to describe: because their reasoning is identical, any difference between their descriptions comes from the photos, not from the describers. That’s the essence of a Siamese Network: it uses two (or more) identical sub-networks, often convolutional neural networks (CNNs), which share the exact same architecture and weights. This weight sharing is critical because it guarantees that both sub-networks compute the same kind of feature representation, or embedding, for their inputs. When you feed two images into the network, each sub-network processes its image independently, producing a dense feature vector (a numerical representation) for each. These vectors capture what the network has learned are the most important characteristics of the face in each image. The two output vectors are then compared using a distance metric. During training, the shared weights are adjusted so that embeddings of the same person (for example, two different photos of them) end up close together in this embedding space, giving a small distance, while embeddings of different people are pushed far apart, giving a large distance. This mechanism lets the network learn a robust similarity measure, which is the cornerstone of effective face recognition and one-shot learning.
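To make weight sharing concrete, here is a deliberately tiny NumPy sketch, not a real face model. A hypothetical one-layer “sub-network” (a random projection followed by tanh and L2 normalization) stands in for the CNN branch, and random 16-value vectors stand in for images. Both branches call the same function with the same weight matrix W, which is all that weight sharing means.

```python
import numpy as np

rng = np.random.default_rng(42)

# Hypothetical toy setup: 16-value "images" projected to 4-dimensional embeddings.
W = rng.normal(size=(16, 4))  # the ONE set of weights shared by both branches


def branch(image, weights):
    """Toy sub-network: linear projection, tanh non-linearity, then L2 normalization."""
    h = np.tanh(image @ weights)
    return h / np.linalg.norm(h)


image_a = rng.random(16)
image_b = rng.random(16)

# Both branches use the same weights W, so they map inputs into the same embedding space.
emb_a = branch(image_a, W)
emb_b = branch(image_b, W)
distance = np.linalg.norm(emb_a - emb_b)
print(f"distance between embeddings: {distance:.4f}")

# With *unshared* weights, the same image would land in two different places,
# so comparing the two branches' outputs would no longer be meaningful.
W_other = rng.normal(size=(16, 4))
same_without_sharing = np.allclose(branch(image_a, W), branch(image_a, W_other))
print("identical embeddings without weight sharing?", same_without_sharing)
```

Feeding the same image through both shared branches always yields the same embedding (distance 0), which is precisely the property that makes the distance between two different images meaningful.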

How Siamese Networks Actually Work

Let’s get into the nitty-gritty of how Siamese Networks actually work, because understanding this mechanism is key to building successful face recognition systems. Picture this: you have two input images, say Image A and Image B. These images are fed into two distinct, yet identical, branches of a neural network. This isn’t just a convenient design choice; the shared weights across these branches are fundamental. They ensure that both branches perform exactly the same transformations and extract features in the same way, so if you feed the same image into both branches, they produce identical feature vectors, or embeddings. Each branch is typically a Convolutional Neural Network (CNN): perhaps a pre-trained model such as a modified VGG16, ResNet, or InceptionV3, or a custom architecture you design. This CNN acts as a feature extractor, compressing the high-dimensional image into a compact numerical embedding vector (e.g., a 128-dimensional vector). Once both images have been processed and you have embedding A and embedding B, the next step is to compare them, typically with Euclidean distance or cosine similarity. Euclidean distance is the straight-line distance between the two vectors in the embedding space: a smaller distance implies greater similarity, while a larger distance indicates dissimilarity. Cosine similarity works the other way around: it measures the angle between the vectors, and a higher score means the faces are more similar. During training, the network is optimized to minimize the distance between embeddings of matching pairs (e.g., two images of the same person) and maximize the distance between embeddings of non-matching pairs (e.g., images of different people). Learning a similarity function directly through distance comparisons is what makes Siamese Networks so effective for face verification and face identification, especially where one-shot learning is a requirement. It’s an ingenious way to teach a machine about similarity rather than just classification.
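Here is a small NumPy sketch of the two comparison functions mentioned above, applied to made-up 3-dimensional unit vectors standing in for embeddings. It also checks a useful identity: for L2-normalized embeddings, the squared Euclidean distance equals 2 - 2 × cosine similarity, so the two metrics rank pairs identically.

```python
import numpy as np


def euclidean_distance(u, v):
    """Straight-line distance: smaller means more similar."""
    return float(np.linalg.norm(u - v))


def cosine_similarity(u, v):
    """Cosine of the angle between u and v: larger means more similar."""
    return float(np.dot(u, v) / (np.linalg.norm(u) * np.linalg.norm(v)))


# Made-up unit-length "embeddings": a and b point in similar directions, c does not.
emb_a = np.array([0.6, 0.8, 0.0])
emb_b = np.array([0.8, 0.6, 0.0])
emb_c = np.array([0.0, 0.0, 1.0])

print(euclidean_distance(emb_a, emb_b), cosine_similarity(emb_a, emb_b))  # ~0.283, ~0.96
print(euclidean_distance(emb_a, emb_c), cosine_similarity(emb_a, emb_c))  # ~1.414, 0.0

# For unit vectors: ||u - v||^2 == 2 - 2 * cos(u, v)
d2 = euclidean_distance(emb_a, emb_b) ** 2
print(np.isclose(d2, 2 - 2 * cosine_similarity(emb_a, emb_b)))  # True
```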

The Magic of One-Shot Learning with Siamese Networks

Let’s talk about the real game-changer when it comes to Siamese Networks and face recognition: one-shot learning. Traditionally, if you wanted a neural network to identify a new person, you’d need to retrain at least part of your model with numerous examples of that person’s face. That is resource-intensive and impractical for systems that constantly add new users. Siamese Networks flip this paradigm. With one-shot learning, you can introduce a new identity to your system with just a single example image. Yes, you heard that right: one single photo! Here’s how it works. Once your Siamese Network has been trained to learn a robust similarity metric (i.e., it knows which features make faces similar or different), it can generate an embedding for any face. When you enroll a new person, you pass their single reference image through one branch of your trained network and store the resulting embedding vector in your database, labeled with that person’s identity. Later, when an unknown face appears, you generate its embedding the same way. Then, instead of classifying it into a fixed set of categories, you compare this new embedding to all the stored embeddings using your chosen distance metric and take the closest one. If that smallest distance falls below a predefined threshold, you’ve found a match; if not, the face is treated as unknown. This means your system can identify or verify new individuals without any further training. It isn’t learning new categories; it’s doing smart comparisons in its learned embedding space. This capability is invaluable for face recognition in dynamic environments such as employee access systems, customer identification, or personal photo organization, and it makes Siamese Networks a highly scalable and adaptable approach to biometric identification that dramatically simplifies adding new users.
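The enroll-then-compare flow above can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions: FaceGallery is a hypothetical in-memory class (a real system would use a database and the trained network’s embeddings), the toy 3-dimensional vectors stand in for real embeddings, and the 0.6 threshold is an arbitrary placeholder you would tune on validation data.

```python
import numpy as np


def l2_normalize(x):
    """Scale a vector to unit length so distances are comparable."""
    x = np.asarray(x, dtype=float)
    return x / np.linalg.norm(x)


class FaceGallery:
    """Hypothetical in-memory gallery: one stored embedding per enrolled person."""

    def __init__(self, threshold):
        self.threshold = threshold  # maximum distance that still counts as a match
        self.names = []
        self.embeddings = []

    def enroll(self, name, embedding):
        # One-shot enrollment: store the single reference embedding; no retraining.
        self.names.append(name)
        self.embeddings.append(l2_normalize(embedding))

    def identify(self, embedding):
        """Return (name, distance) of the closest enrolled person, or (None, distance)."""
        if not self.embeddings:
            return None, float("inf")
        query = l2_normalize(embedding)
        distances = np.linalg.norm(np.stack(self.embeddings) - query, axis=1)
        best = int(np.argmin(distances))  # nearest stored embedding
        name = self.names[best] if distances[best] < self.threshold else None
        return name, float(distances[best])


gallery = FaceGallery(threshold=0.6)  # placeholder threshold
gallery.enroll("alice", [1.0, 0.0, 0.0])
gallery.enroll("bob", [0.0, 1.0, 0.0])

print(gallery.identify([0.9, 0.1, 0.0]))  # close to alice -> ('alice', ~0.11)
print(gallery.identify([0.0, 0.0, 1.0]))  # far from everyone -> (None, ~1.414)
```

Enrolling a third person is just another enroll() call; nothing about the model changes, which is the practical payoff of one-shot learning.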

Understanding the Triplet Loss Function

When we’re talking about training Siamese Networks for face recognition, one of the most crucial components for effective learning is the Triplet Loss function. Unlike traditional loss functions that compare a prediction to a single target, Triplet Loss works with triplets of images: an anchor image, a positive image, and a negative image. Let’s break down what each means, guys: the anchor (A) is a reference image of a specific person. The positive (P) is a different image of the same person as the anchor. And the negative (N) is an image of a completely different person. The objective of the Triplet Loss is to ensure that the distance between the anchor and the positive (the same person) is smaller than the distance between the anchor and the negative (a different person). Mathematically, we want:

distance(A, P) + margin < distance(A, N)

The margin here is a crucial hyperparameter: a small positive value that forces a clear separation between matching and non-matching pairs. It ensures that the positive isn’t just closer, but closer by at least the margin, creating a robust separation boundary in the embedding space. Whenever this condition isn’t met, the loss is positive and penalizes the network; formally, the per-triplet loss is max(distance(A, P) - distance(A, N) + margin, 0), which drops to zero once the constraint is satisfied. Minimizing it pulls the embeddings of the same person closer together while simultaneously pushing the embeddings of different people further apart. This structured comparison teaches the network to differentiate faces accurately, making it well suited to the nuanced task of face recognition, where small variations can make a big difference. A well-implemented triplet loss (or a comparable metric-learning loss) is what gives a Siamese Network the discriminative embeddings it needs for one-shot learning and accurate identification.
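As a quick worked check of the formula, here is a NumPy version of the triplet loss, averaged over a batch of triplets. It assumes squared Euclidean distance (a common choice) and a placeholder margin of 0.2; the 2-D vectors are made up so the numbers are easy to verify by hand.

```python
import numpy as np


def triplet_loss(anchor, positive, negative, margin=0.2):
    """Mean of max(d(A, P) - d(A, N) + margin, 0) over a batch, with squared Euclidean d."""
    d_ap = np.sum((anchor - positive) ** 2, axis=-1)
    d_an = np.sum((anchor - negative) ** 2, axis=-1)
    return float(np.mean(np.maximum(d_ap - d_an + margin, 0.0)))


anchor = np.array([[1.0, 0.0]])
positive = np.array([[0.8, 0.6]])        # d(A, P) = 0.04 + 0.36 = 0.4
easy_negative = np.array([[0.0, 1.0]])   # d(A, N) = 1 + 1 = 2.0 -> constraint met
hard_negative = np.array([[0.8, -0.6]])  # d(A, N) = 0.4 -> as close as the positive

print(triplet_loss(anchor, positive, easy_negative))  # 0.0
print(triplet_loss(anchor, positive, hard_negative))  # ~0.2 (exactly the margin)
```

The easy negative already satisfies distance(A, P) + margin < distance(A, N), so it contributes nothing; the hard negative, sitting as close as the positive, is penalized by exactly the margin.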

Building Your Own Face Recognition System

Alright, folks, it’s time to roll up our sleeves and talk about actually building your very own face recognition system using Siamese Networks with Keras and TensorFlow. This isn’t just theoretical; we’re going to cover the practical steps you’d take, from setting up your environment to training your model. The journey involves several critical stages, each demanding careful attention to detail for a robust and accurate system. We’ll start by making sure your development environment is properly configured, because a good foundation is half the battle. Then we’ll dive into the often-underestimated importance of data preparation, discussing how to gather, augment, and structure your dataset to maximize your model’s learning potential. Following that, we’ll tackle the exciting part: designing the Siamese Network architecture itself, making choices that define its feature extraction capabilities. Finally, we’ll walk through training your model with the intuitive Keras API and evaluating its performance to ensure it meets your expectations. This hands-on approach will equip you with the knowledge and confidence to implement your own face recognition solution, transforming raw image data into practical biometric identification. So, let’s get into the specifics and demystify the process step by step.

Setting Up Your Deep Learning Environment

Before you can start building a face recognition system with Siamese Networks in Keras and TensorFlow, the first step is to get your deep learning environment properly set up. Trust me, guys, a solid foundation prevents a lot of headaches down the road! Python is the language of choice for most deep learning work, so you’ll need a Python 3 version supported by the TensorFlow release you plan to use (recent releases require a fairly recent Python 3; check TensorFlow’s installation page). Next, the cornerstone is TensorFlow itself. For good performance, especially with large datasets and complex neural networks, you’ll want GPU acceleration if you have a compatible NVIDIA graphics card with the matching CUDA and cuDNN libraries; this can speed up training dramatically. Note that the separate tensorflow-gpu package is deprecated: the standard tensorflow package has included GPU support since TensorFlow 2.1, and on Linux, recent releases can install the CUDA libraries for you via pip install "tensorflow[and-cuda]". Without a GPU, the same tensorflow package runs on the CPU just fine, though training will be slower. Since Keras is integrated directly into TensorFlow (as tf.keras), you don’t need a separate Keras installation. Beyond TensorFlow, you’ll want NumPy for numerical operations, Matplotlib for visualizing data and training progress, and OpenCV (imported as cv2, installed as opencv-python) for image processing tasks such as loading and resizing images and, potentially, face detection or alignment pre-processing. A virtual environment (using venv or conda) is highly recommended to keep your project dependencies isolated and clean. With your tools ready, you can focus on model development without compatibility issues slowing you down and put the full power of Keras and TensorFlow to work on your face recognition project.
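A quick way to confirm the setup is a small Python script like the one below. It’s a sketch, not an official tool: it checks that the packages mentioned above are importable (opencv-python is imported as cv2) and, if TensorFlow is present, prints its version and the GPUs it can see via tf.config.list_physical_devices. Running something like pip install tensorflow numpy matplotlib opencv-python inside your virtual environment should provide them.

```python
import importlib.util
import sys

# pip package name -> import name for the libraries mentioned above
REQUIRED = {
    "tensorflow": "tensorflow",
    "numpy": "numpy",
    "matplotlib": "matplotlib",
    "opencv-python": "cv2",
}


def check_environment():
    """Return {package: True/False} for whether each package can be imported (without importing it)."""
    return {pkg: importlib.util.find_spec(module) is not None for pkg, module in REQUIRED.items()}


if __name__ == "__main__":
    print("Python", sys.version.split()[0])
    for pkg, ok in check_environment().items():
        print(f"{pkg:<15} {'OK' if ok else 'MISSING'}")
    if importlib.util.find_spec("tensorflow") is not None:
        import tensorflow as tf

        print("TensorFlow", tf.__version__)
        # An empty list means TensorFlow will run on the CPU only.
        print("GPUs visible to TensorFlow:", tf.config.list_physical_devices("GPU"))
```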

Data Preparation: The Foundation of Success

When it comes to building a high-performing face recognition system using Siamese Networks, I cannot stress this enough, folks: data preparation is absolutely critical and often the most time-consuming part. A good face dataset is the bedrock of your model’s accuracy and robustness. You need a collection of images that reflects the diversity your system will encounter in the real world, including variations in lighting, pose, expression, age, and background. Merely collecting images isn’t enough; you’ll likely need data augmentation to artificially expand your dataset and make your model more resilient to these variations. Techniques like small random rotations, horizontal flips, slight zooms, brightness adjustments, and color jitter can significantly improve generalization. Face alignment is another crucial pre-processing step: it involves detecting facial landmarks (like the eyes, nose, and mouth) and then geometrically transforming the image so that all faces are consistently positioned and scaled, reducing unwanted variability. Finally, and perhaps most importantly for Siamese Networks that use triplet loss, you need to generate triplets (anchor, positive, negative) from your dataset efficiently. This isn’t trivial. Randomly chosen negatives are usually easy to tell apart from the anchor, so they quickly satisfy the margin and stop producing any loss; hard negatives (faces of different people that look similar to the anchor) are what give the network a useful training signal. Strategies like online triplet mining, where hard triplets are selected within each training batch, or pre-computing triplets that violate the margin constraint are commonly used. The quality and careful preparation of your data directly determine how well your Siamese Network learns to separate matching from non-matching faces, making it the true foundation of your face recognition system’s success. Don’t skimp on this step; it’s where your model truly learns to shine.
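Here is a NumPy sketch of both ideas, assuming you already have an integer identity label per image (and, for mining, the current embeddings of a batch). sample_random_triplets draws valid random triplets, and batch_hard_triplets implements one common form of online mining, the “batch-hard” strategy: for each anchor it picks the farthest positive and the closest negative within the batch. Both are hypothetical helpers written for illustration, not library APIs.

```python
import numpy as np


def sample_random_triplets(labels, num_triplets, rng):
    """Draw (anchor, positive, negative) index triplets uniformly at random."""
    labels = np.asarray(labels)
    by_label = {lab: np.flatnonzero(labels == lab) for lab in np.unique(labels)}
    # Only identities with at least two images can supply an anchor AND a positive.
    eligible = [lab for lab, idx in by_label.items() if len(idx) >= 2]
    triplets = []
    for _ in range(num_triplets):
        lab = eligible[rng.integers(len(eligible))]
        a, p = rng.choice(by_label[lab], size=2, replace=False)
        n = rng.choice(np.flatnonzero(labels != lab))  # any image of a different person
        triplets.append((int(a), int(p), int(n)))
    return triplets


def batch_hard_triplets(embeddings, labels):
    """Batch-hard mining: per anchor, the farthest positive and the closest negative in the batch."""
    labels = np.asarray(labels)
    diff = embeddings[:, None, :] - embeddings[None, :, :]
    dist = np.sqrt(np.sum(diff ** 2, axis=-1))  # pairwise Euclidean distance matrix
    same = labels[:, None] == labels[None, :]
    triplets = []
    for i in range(len(labels)):
        pos_mask = same[i].copy()
        pos_mask[i] = False  # an image is not its own positive
        neg_mask = ~same[i]
        if not pos_mask.any() or not neg_mask.any():
            continue  # this anchor has no valid positive or negative in the batch
        p = int(np.argmax(np.where(pos_mask, dist[i], -np.inf)))
        n = int(np.argmin(np.where(neg_mask, dist[i], np.inf)))
        triplets.append((i, p, n))
    return triplets


rng = np.random.default_rng(0)
labels = [0, 0, 1, 1, 2]  # identity label per image
print(sample_random_triplets(labels, 3, rng))

# Made-up 1-D "embeddings" for a batch of five images.
batch_embeddings = np.array([[0.0], [1.0], [3.0], [2.5], [10.0]])
batch_labels = [0, 0, 0, 1, 1]
print(batch_hard_triplets(batch_embeddings, batch_labels))
# [(0, 2, 3), (1, 2, 3), (2, 0, 3), (3, 4, 2), (4, 3, 2)]
```

Batch-hard mining recomputes distances with the current model, so it is typically run per batch during training; always taking the very hardest negative can destabilize early training, which is why softer variants (such as semi-hard mining) are also used.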

Designing the Siamese Network Architecture

Now we get to the exciting part, guys: designing the Siamese Network architecture itself, the brain behind your face recognition system. Remember, a Siamese Network consists of two (or more) identical subnetworks that share weights. The core task of each subnetwork is to act as a powerful feature extractor, transforming a raw face image into a compact, discriminative embedding vector. A common approach is to use a Convolutional Neural Network (CNN) as the backbone. You could start with proven computer vision architectures such as a modified VGG16, ResNet-50, or InceptionV3, often pre-trained on a large dataset like ImageNet. Starting from a pre-trained model lets you reuse learned features and then fine-tune them for faces, a technique known as transfer learning. Alternatively, you can design a custom CNN with a series of convolutional layers, pooling layers (like MaxPooling), and activation functions (commonly ReLU) that progressively extract hierarchical features. The final layers of the backbone typically include dense layers that map the high-level features down to a fixed-size embedding vector. The embedding size (e.g., 128, 256, or 512 dimensions) is an important hyperparameter that influences how fine-grained the similarity comparisons can be: a larger embedding may capture more detail but can be more prone to overfitting. It’s common practice to follow this output layer with an L2 normalization step, which places every embedding on the unit hypersphere so that distances are comparable across images and the triplet-loss margin has a consistent scale. The goal is a network that collapses the complex information in a face into a compact, low-dimensional representation in which faces of the same person lie close together in the embedding space. This feature extractor is the key component that enables your Siamese Network to perform accurate one-shot learning for face recognition.
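A minimal tf.keras sketch of such a backbone might look like the following. The layer sizes, the 96x96 input, and the 128-dimensional embedding are illustrative assumptions, not recommended values; keras.layers.UnitNormalization (available in recent Keras versions) performs the L2 normalization. For transfer learning, you could instead start from a model such as tf.keras.applications.ResNet50(include_top=False, weights="imagenet", pooling="avg") and add the Dense and normalization layers on top.

```python
import numpy as np
from tensorflow import keras


def build_embedding_network(input_shape=(96, 96, 3), embedding_dim=128):
    """Hypothetical small CNN that maps a face crop to an L2-normalized embedding."""
    inputs = keras.Input(shape=input_shape)
    x = inputs
    # Conv -> ReLU -> MaxPooling stages extract progressively higher-level features.
    for filters in (32, 64, 128):
        x = keras.layers.Conv2D(filters, 3, padding="same", activation="relu")(x)
        x = keras.layers.MaxPooling2D()(x)
    # Collapse the spatial dimensions, then project down to the embedding size.
    x = keras.layers.GlobalAveragePooling2D()(x)
    x = keras.layers.Dense(embedding_dim)(x)
    # L2 normalization: every embedding ends up on the unit hypersphere.
    outputs = keras.layers.UnitNormalization(axis=-1)(x)
    return keras.Model(inputs, outputs, name="embedding_network")


embedding_net = build_embedding_network()
embedding_net.summary()

# Sanity check on random "images": one 128-dim, unit-length vector per input.
fake_faces = np.random.rand(2, 96, 96, 3).astype("float32")
embeddings = np.asarray(embedding_net(fake_faces))
print(embeddings.shape, np.linalg.norm(embeddings, axis=1))
```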

Training Your Siamese Model with Keras and TensorFlow

Once you’ve prepared your data and designed your Siamese Network architecture, it’s time for the rubber to meet the road: training your Siamese model with Keras and TensorFlow. This is where your model learns to extract meaningful embeddings for face recognition. First, you’ll define the base CNN model (your feature extractor), either with tf.keras.applications for pre-trained models or by building a custom Sequential or Functional model. The critical part is creating the Siamese model itself. For triplet loss, you’ll set up three Input layers in Keras, one each for the anchor, positive, and negative images (pair-based setups, such as those trained with a contrastive loss, use two). Then you pass every input through the same single base CNN model, which is what shares its weights across the branches. The outputs of these branches are the embeddings. The real trick here, guys, is implementing the triplet loss function. Triplet loss isn’t among the standard tf.keras.losses, and a Keras loss receives only y_true and y_pred, so you’ll typically implement it as a custom loss function (for example, by having the model output the three embeddings stacked together and splitting them apart inside the loss) or write a custom training loop. This custom loss takes the anchor, positive, and negative embeddings and computes the loss based on the margin constraint we discussed earlier. Once the architecture and loss are ready, you’ll compile your model. Choose an appropriate optimizer, with Adam being a popular and effective choice, and specify your custom triplet_loss. Then use model.fit() to start training, feeding it your generated triplets. Pay close attention to batch size, number of epochs, and learning rate schedules or callbacks (like ReduceLROnPlateau). Monitoring loss convergence, along with custom metrics such as accuracy on a held-out verification set, is crucial during training. Successful training yields a model that generates highly discriminative embeddings, making your face recognition system accurate and reliable. This iterative process of training, evaluating, and fine-tuning is what makes your model truly powerful.
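Putting those pieces together, here is a compact tf.keras training sketch under several assumptions: a deliberately tiny stand-in backbone (swap in your own, such as the kind of CNN described earlier), a margin of 0.2, a 64-dimensional embedding, and random arrays in place of real anchor, positive, and negative face crops. It uses the stacking approach mentioned above: the model concatenates the three embeddings into one output, the custom triplet_loss splits them back apart, and the y_true passed to fit() is a dummy array that the loss ignores.

```python
import numpy as np
import tensorflow as tf
from tensorflow import keras

INPUT_SHAPE = (64, 64, 3)  # assumed face-crop size for this sketch
EMBEDDING_DIM = 64         # assumed embedding size
MARGIN = 0.2               # placeholder margin; tune on your data


def build_embedding_network():
    """Tiny stand-in backbone; replace with your real CNN or a tf.keras.applications model."""
    inputs = keras.Input(shape=INPUT_SHAPE)
    x = keras.layers.Conv2D(32, 3, activation="relu")(inputs)
    x = keras.layers.GlobalAveragePooling2D()(x)
    x = keras.layers.Dense(EMBEDDING_DIM)(x)
    outputs = keras.layers.UnitNormalization(axis=-1)(x)
    return keras.Model(inputs, outputs, name="embedding_network")


embedding_net = build_embedding_network()

# Three inputs, ONE embedding network: calling the same model three times shares its weights.
anchor_in = keras.Input(shape=INPUT_SHAPE, name="anchor")
positive_in = keras.Input(shape=INPUT_SHAPE, name="positive")
negative_in = keras.Input(shape=INPUT_SHAPE, name="negative")
stacked = keras.layers.Concatenate(axis=-1)(
    [embedding_net(anchor_in), embedding_net(positive_in), embedding_net(negative_in)]
)
siamese_model = keras.Model([anchor_in, positive_in, negative_in], stacked)


def triplet_loss(y_true, y_pred):
    """y_true is a dummy target; y_pred holds [anchor | positive | negative] embeddings."""
    a = y_pred[:, :EMBEDDING_DIM]
    p = y_pred[:, EMBEDDING_DIM:2 * EMBEDDING_DIM]
    n = y_pred[:, 2 * EMBEDDING_DIM:]
    d_ap = tf.reduce_sum(tf.square(a - p), axis=-1)  # squared Euclidean distances
    d_an = tf.reduce_sum(tf.square(a - n), axis=-1)
    return tf.maximum(d_ap - d_an + MARGIN, 0.0)  # per-triplet loss; Keras averages it


siamese_model.compile(optimizer=keras.optimizers.Adam(learning_rate=1e-4), loss=triplet_loss)

# Random arrays stand in for real, aligned (anchor, positive, negative) face crops.
rng = np.random.default_rng(0)
num_triplets = 32
anchors, positives, negatives = (
    rng.random((num_triplets, *INPUT_SHAPE), dtype=np.float32) for _ in range(3)
)
dummy_targets = np.zeros((num_triplets, 3 * EMBEDDING_DIM), dtype=np.float32)

history = siamese_model.fit(
    [anchors, positives, negatives],
    dummy_targets,
    batch_size=8,
    epochs=2,
    verbose=0,
    callbacks=[keras.callbacks.ReduceLROnPlateau(monitor="loss", factor=0.5, patience=1)],
)
print(history.history["loss"])
```

After training, only embedding_net is needed at inference time: call it on a single aligned face to get the embedding you store at enrollment or compare against the gallery.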

Evaluating Performance and Fine-Tuning Your System

After investing all that effort into data preparation and training, the next crucial phase is evaluating performance and fine-tuning your face recognition system. This isn’t just about a loss value; it’s about understanding how well your Siamese Network actually identifies and verifies faces. Face verification accuracy is typically measured by taking pairs of images (some matching, some non-matching), computing the distance between their embeddings, and declaring a match when that distance falls below a threshold. You’ll assess metrics like the True Positive Rate (TPR), the False Positive Rate (FPR), and the Equal Error Rate (EER), which is the error rate at the threshold where the FPR equals the False Negative Rate (FNR = 1 - TPR). For face identification, you might test how accurately the system identifies an unknown face against a database of many known individuals. Choosing the threshold is absolutely key here: it balances false positives (accepting a face that actually belongs to someone else) against false negatives (failing to recognize a known person). A receiver operating characteristic (ROC) curve, which plots TPR against FPR across thresholds, is very useful for this. If your initial results aren’t stellar, it’s time for fine-tuning. Common pitfalls include insufficient training data, a suboptimal network architecture, or a poorly chosen margin for the triplet loss. Fine-tuning strategies include adjusting the learning rate (perhaps starting higher and gradually reducing it), experimenting with different optimizers, modifying the network architecture (e.g., adding layers, changing activation functions, or trying a different pre-trained backbone), and, critically, improving your data quality or triplet mining strategy. You might also use hard example mining during training to focus on the triplets the model finds hardest. It’s all about iterative improvement, guys. By systematically evaluating your model and intelligently fine-tuning its components, you can significantly boost the accuracy and robustness of your Keras and TensorFlow-powered face recognition system and move it closer to real-world deployment.
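The threshold sweep described above can be sketched in NumPy as follows. roc_points and equal_error_rate are hypothetical helpers; they assume you have already computed one embedding distance per evaluation pair plus a boolean “same person” label, and they predict a match whenever the distance is below the threshold. The eight distances are made up to keep the arithmetic easy. If you prefer a library, scikit-learn’s sklearn.metrics.roc_curve produces the full ROC curve when you pass negated distances as scores (it treats higher scores as more likely positive).

```python
import numpy as np


def roc_points(distances, is_same, thresholds):
    """Predict 'same person' when distance < threshold; return FPR and FNR per threshold."""
    distances = np.asarray(distances, dtype=float)
    is_same = np.asarray(is_same, dtype=bool)
    fpr, fnr = [], []
    for t in thresholds:
        accept = distances < t
        fpr.append(np.mean(accept[~is_same]))  # impostor pairs wrongly accepted
        fnr.append(np.mean(~accept[is_same]))  # genuine pairs wrongly rejected (1 - TPR)
    return np.array(fpr), np.array(fnr)


def equal_error_rate(distances, is_same):
    """Approximate EER: pick the candidate threshold where FPR and FNR are closest."""
    thresholds = np.append(np.unique(np.asarray(distances, dtype=float)), np.inf)
    fpr, fnr = roc_points(distances, is_same, thresholds)
    best = int(np.argmin(np.abs(fpr - fnr)))
    return float((fpr[best] + fnr[best]) / 2), float(thresholds[best])


# Made-up evaluation pairs: the first four are genuine (same person), the last four impostors.
pair_distances = [0.2, 0.3, 0.5, 0.9, 0.4, 0.8, 1.0, 1.2]
pair_is_same = [True, True, True, True, False, False, False, False]

eer, threshold = equal_error_rate(pair_distances, pair_is_same)
print(f"EER = {eer:.2f} at threshold {threshold}")  # EER = 0.25 at threshold 0.8
```

At that threshold one genuine pair (distance 0.9) is rejected and one impostor pair (distance 0.4) is accepted, so both error rates are 1/4. In practice you would pick the operating threshold on a validation set, and a security-critical deployment may deliberately accept a higher FNR to push the FPR lower.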

Real-World Applications and Ethical Considerations

As we wrap up our deep dive into face recognition with Siamese Networks, it’s crucial to broaden our perspective and consider the real-world applications and, just as importantly, the ethical considerations that come with deploying such powerful technology. This isn’t just an academic exercise, folks; these systems are increasingly intertwined with our daily lives, from personal convenience to national security. Understanding where and how this technology is used, along with the responsibilities that come with deploying it, is paramount for anyone involved in AI development. The potential benefits are immense: enhanced security, personalized experiences, and greater efficiency across countless industries. However, the capabilities of face recognition also raise significant questions about privacy, bias, and the potential for misuse. As creators and users of these systems, we have a collective responsibility to approach their development and implementation with a strong ethical framework, ensuring they serve humanity positively and equitably. Let’s explore the exciting possibilities and the challenging dilemmas posed by this cutting-edge technology.

The Broad Spectrum of Face Recognition Applications

The applications of face recognition powered by Siamese Networks are vast and continue to expand, touching nearly every facet of modern life. One of the most prominent uses is in security systems and access control. Imagine secure facilities where traditional keycards or fingerprint scanners are replaced or augmented by a seamless facial scan, giving authorized personnel quick entry while maintaining high levels of security. The face unlock feature on your own mobile device relies on related face verification technology for convenient and secure access. Beyond security, face recognition is making waves in personalization: retailers might use it to recognize returning customers and offer personalized experiences and tailored recommendations. At events, it can be used for attendance tracking or even to analyze audience engagement. Law enforcement agencies use it to identify suspects or locate missing persons, though this is where the ethical debates become most pronounced. In healthcare, face recognition can help identify patients and link them to the correct medical records, and related facial-analysis techniques are being explored for contactless monitoring of vital signs. Because these systems support one-shot learning, adding new individuals is efficient and scalable, which makes the approach attractive for large organizations and dynamic user bases. The potential is immense, guys, ranging from intelligent surveillance for urban security to streamlining everyday interactions and creating truly smart environments. It’s a testament to the versatility of deep learning applied to computer vision challenges, with Siamese Networks providing a robust solution for identification tasks.

Navigating the Ethical Maze of Facial Recognition

While the technological prowess of face recognition is undeniable, especially when built with robust tools like Siamese Networks in Keras and TensorFlow, it’s absolutely crucial that we, as developers and users, address the ethical considerations that come with such powerful capabilities. The field of ethical AI is more important than ever. The most significant concern, folks, is privacy. The ability to identify individuals in public spaces without their explicit consent raises serious questions about surveillance and personal freedom. Who owns this data? How is it stored? And who has access to it? These questions demand transparent answers. Another major challenge is bias in AI systems. If the training data for your face recognition model (a Siamese Network or otherwise) is not diverse enough, for instance lacking representation of certain demographics, skin tones, or genders, the system can exhibit significant bias, with higher error rates for underrepresented groups. This has real-world consequences, from wrongful arrests to denial of services. Ensuring fairness and preventing algorithmic discrimination must be a top priority. Moreover, data security is paramount. Face recognition templates are sensitive biometric data, and unlike a password, a face cannot be changed after a breach, so a leak can have severe and lasting implications for individuals. We need robust encryption and secure storage practices. Finally, the potential for misuse, such as mass surveillance or tracking, cannot be ignored. As builders of these systems, we have a responsibility not only to develop effective technology but also to advocate for responsible AI development, clear regulations, and thoughtful implementation guidelines. We need to foster a public dialogue about the appropriate use of facial recognition and strive to create systems that uphold human rights and societal values. It’s not enough to build it; we must build it wisely and ethically, always considering the broader impact on society.

Conclusion: Your Journey into Face Recognition Mastery

Congratulations, folks! You’ve just taken a deep dive into the exciting and complex world of face recognition, focusing on the ingenious architecture of Siamese Networks and their implementation using Keras and TensorFlow. We’ve journeyed from the fundamental concepts of face recognition and why traditional methods fall short, to how Siamese Networks, with their one-shot learning capabilities and the triplet loss function, offer an elegant solution to identifying individuals from minimal data. You’ve also seen the practical steps involved in building such a system, from setting up your deep learning environment and carefully preparing your data to designing the network architecture, training your model, and rigorously evaluating its performance. We’ve touched on the diverse real-world applications of this technology and its transformative potential across industries. Crucially, we also navigated the ethical considerations that accompany face recognition, highlighting the importance of privacy, fairness, and responsible AI development. This field is evolving rapidly, and with the knowledge you’ve gained today, you’re well equipped not only to understand this cutting-edge domain but also to contribute to it. Whether you’re aiming to build a secure access system, enhance user experiences, or simply explore the frontiers of computer vision, the combination of Siamese Networks, Keras, and TensorFlow provides a robust and flexible framework. So go forth, experiment, and continue your learning journey. The future of intelligent systems is in your hands, and it’s an incredibly exciting place to be! Keep building, keep learning, and keep innovating, responsibly!